思路

- 首先就是仔细看反馈表中的问题,有些报回来的bug是没问题的,只是测试那边不了解业务流程,这种说明一下情况基本就解决了;

- 然后就是真的有问题的bug,先进行bug复现,先看看本地服务器会不会有这bug,如果本地服务器也报这个bug,那就是确实又问题了,这样就开始debug。如果本地服务器没问题,额这种情况就要远程连接医院那边的电脑进行bug排查。

- 先看报错的语句,复制到源码看看能不能找到,如果不能找到的话可能是前端的问题,报的前端的错误,也可能是本地服务器的权限中心字段名没对应上,也就是权限中心没配置好,这时就要找对应的负责人沟通了;如果能源码找到跑错的语句才开始真正的bug排查。

- 开始bug排查,f12打开浏览器控制台,复现bug查看控制台信息看是调用了哪个接口,再看消毒供应室的源码找到对应的rest,再找service,mapper,一步一步深入看看是那个部分哪里出错了。

- 如果按4这样粗略地排查看不出来的话就要看日志了,连接上本地服务器,命令行输入 tail -300f log日志文件 ,然后复现报bug的操作,拿到日志信息看具体是哪里有问题了,然后用swagger或者postman发送信息在本地环境debug一步一步排查,这种这么仔细一般都能解决大部分bug了。

举个栗子

比如我遇到了一个事务无限回滚的问题,这就的的确确是个大问题,先在本地环境尝试着能不能复现,在谷歌浏览器中按F12,查看回滚那一个操作到底干了些啥

比如上图url为:

http://10.0.1.117:10079/ssd/v1.0/flow/sterilize/batch/qt

http://10.0.1.117:10079是服务器,本地就是localhost

/ssd/v1.0是项目路径(server.context-path)。

如果server.context-path没有配,请求的url地址就是localhost : port/flow/function;

如果server.context-path = “/ssd/v1.0”, 请求的url地址就是localhost : port/ssd/v1.0/flow/function

/flow/sterilize/batch/qt才是我们在源码要找的部分

然后就去源码rest里找啦,一步一步找到rest.java文件,例如:

如果找的过程中卡住了或者找不到的话就直接快捷键 ctrl + shift + f 全局搜索。

找到@RequestMapping,发现value跟我们要找的url末尾部分完全一致,就是找对地方啦(有些目录要分两三段找,反正就是跟文件夹差不多)

然后从rest->service->mapper的顺序过一过流程看看有没有什么错误,嗯,说实话一般都看不出来,这时候就要靠强大的log日志帮我们精确定位问题所在了。

一般项目都会有日志导出的文件,用tail -100f “日志文件”找到最近100条日志信息,来看看它到底是哪里出了问题。出问题的日志如下:

Creating a new SqlSession

Registering transaction synchronization for SqlSession [org.apache.ibatis.session.defaults.DefaultSqlSession@f842db6]

JDBC Connection [com.mysql.cj.jdbc.ConnectionImpl@7b15ae54] will be managed by Spring

==> Preparing: select a.id as "id", a.define_id as "defineId", a.status as "status", a.dept_id as "deptId", a.name as "name", a.warehouse_status as "warehouseStatus", a.invalid_time as "invalidTime", a.valid_time as "validTime", a.action as "action", c.expected_time as "expectedTime" from t_flow_packet_instacne a left join t_flow_sterilize_batch_instance b on a.id = b.packet_instance_id left join t_flow_sterilize_batch c on b.sterilize_batch_id = c.id where a.delete_flag = 0 and c.expected_time < now() and a.status = ? and a.warehouse_status = ?

==> Parameters: 1(String), 1(String)

[2020-12-31 10:54:54.499][DEBUG][http-nio-10081-exec-3][10.0.1.117][245bacd8987a4fa383a0ba6ecfb414ec][192.168.8.98][org.springframework.web.client.HttpMessageConverterExtractor..][] "Reading to [cn.wisefly.auth.rpc.bean.AuthCommonResult>]"

[2020-12-31 10:54:54.500][INFO ][http-nio-10081-exec-3][10.0.1.117][245bacd8987a4fa383a0ba6ecfb414ec][192.168.8.98][cn.wisefly.ssd.core.busi.RestAuthorizationBusi.checkAuthorization.68][checkAuthorization] "intercepter current thread:27,http-nio-10081-exec-3"

Creating a new SqlSession

SqlSession [org.apache.ibatis.session.defaults.DefaultSqlSession@43c921b8] was not registered for synchronization because synchronization is not active

[2020-12-31 10:54:54.500][DEBUG][http-nio-10081-exec-3][10.0.1.117][245bacd8987a4fa383a0ba6ecfb414ec][192.168.8.98][org.springframework.jdbc.datasource.DataSourceUtils..][] "Fetching JDBC Connection from DataSource" SqlSession was not registered for synchronization because synchronization is not active,很显然,出问题的sql语句就是这段话前一个sql了。

竟然都找到出问题的sql语句了,就去数据库调试sql了,这个栗子为什么会无限回滚是因为一个实例对应多条数据,而读每个实例对应的数据时都只读一条,而且还是最老的数据,因为有定时过期灭菌检测,最老的数据不管怎么操作都是过期的,所以才出现无限回滚的情况。

解决方法是我们去读取最新的数据。原本sql语句为:

select a.id as "id", a.define_id as "defineId", a.status as "status",

a.dept_id as "deptId", a.name as "name", a.warehouse_status as "warehouseStatus",

a.invalid_time as "invalidTime", a.valid_time as "validTime",

a.action as "action", c.expected_time as "expectedTime"

from t_flow_packet_instacne a

left join t_flow_sterilize_batch_instance b on a.id = b.packet_instance_id

left join t_flow_sterilize_batch c on b.sterilize_batch_id = c.id

where a.delete_flag = 0

and c.expected_time < now()

and a.status = 1

and a.warehouse_status = 1 修改了下,加了个where判断:

select a.id as "id", a.define_id as "defineId", a.status as "status",

a.dept_id as "deptId", a.name as "name", a.warehouse_status as "warehouseStatus",

a.invalid_time as "invalidTime", a.valid_time as "validTime",

a.action as "action", c.expected_time as "expectedTime"

from t_flow_packet_instacne a

left join t_flow_sterilize_batch_instance b on a.id = b.packet_instance_id

left join t_flow_sterilize_batch c on b.sterilize_batch_id = c.id

where a.delete_flag = 0

and c.expected_time < now()

and a.status = 1

and a.warehouse_status = 1

and b.last_time = (select max(b.last_time)

from t_flow_packet_instacne a

left join t_flow_sterilize_batch_instance b

on a.id = b.packet_instance_id )其实就是让它选择查找最新的数据。

就这样这个结合业务的bug就解决了!!!只是改了下sql语句而已,主要是定位问题,所以定位bug需要花些功夫。我还走了一边debug的流程,加断点,一步一步看参数,结果根本发现不了问题,因为我那时定位的地方不对,走了好几遍debug都没看出啥问题,结果原来是定时任务检测回滚了数据。

栗子后续

处理完上一个bug后,走测试时发现了一个新bug,就是更新数据同一个实例会更新出多条数据展示出来,这也是一个实例对应多条数据的问题,然后我去看数据库实例所对应的表的时候发现实例都是唯一的,说明问题还是出现在一对多的问题上。

Bug:更新实例时会刷新出该实例对应的多条数据。

目标:我们想让实例展示唯一一条数据,而且还是最新的一条数据。

跟上一个栗子操作差不多,先去F12看后台到底调用了哪个接口

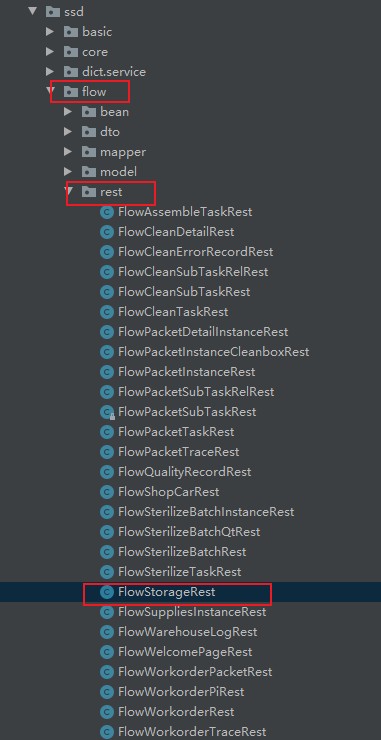

然后找到对应的rest:

对应的接口代码:

rest层(FlowStorageRest)

@GetMapping(value = "/s")

public ResponsePacket<PageResponseData<TFlowPacketInstance>> list(HttpServletRequest request) {

Page page = PageUtils.packagingPage(request);

Condition condition = new Condition(request);

if (condition.get("_sort") == null && condition.get("_order") == null) {

condition.put("_sort", "a.create_time");

condition.put("_order", "desc");

}

Set<String> warehouseStatuses = new HashSet<>();

//hbt reset

warehouseStatuses.add(FlowGlobalValueBean.WarehouseStatus.PUBLISH);

warehouseStatuses.add(FlowGlobalValueBean.WarehouseStatus.NO_PUBLISH);

warehouseStatuses.add(FlowGlobalValueBean.WarehouseStatus.SCRAP);

warehouseStatuses.add(FlowGlobalValueBean.WarehouseStatus.OUT);

warehouseStatuses.add(FlowGlobalValueBean.WarehouseStatus.WAIT_STERILIZE);

condition.put("warehouseStatuses", warehouseStatuses);

//调用service层的listFailureTime

page = packetInstanceService.listFailureTime(condition, page);

Map<String, Map<String, String>> dictData = dictService.loadDict("ssd_warehouse_status", "ssd_packet_status", "ssd_warehouse_action");

PageResponseData<TFlowPacketInstance> pageResponseData = new PageResponseData<>(page, dictData);

return ResponsePacket.generateSuccessPacket(pageResponseData);

}如果文件太大太长不好找接口的话,可以选择用idea快捷键ctrl + f快速找到该文件下的接口位置

service层:

@Transactional

public Page listFailureTime(Map<String, Object> condition, Page page) {

if (page == null) {

page = new Page();

}

int startIndex = page.getStartIndex().intValue();

int pageSize = page.getVolumePage().intValue();

Integer count = mapper.countFailureTime(condition);

if (count == null || count.intValue() == 0) {

page.setTotalCount(0);

page.setRecords(new ArrayList());

return page;

}

//调用mapper层的listFailureTime

List<Map<String, Object>> list = mapper.listFailureTime(condition, new RowBounds(startIndex, pageSize));

page.setTotalCount(count);

page.setRecords(list);

return page;

}mapper层:

<select id="listFailureTime" resultType="Map" parameterType="Map">

select

a.id as "id",

a.define_id as "defineId",

a.packet_data as "packetData",

a.status as "status",

a.dept_id as "deptId",

a.name as "name",

a.warehouse_status as "warehouseStatus",

a.create_user as "createUser",

a.create_time as "createTime",

a.last_user as "lastUser",

a.last_time as "lastTime",

a.delete_flag as "deleteFlag",

a.invalid_time as "invalidTime",

a.valid_time as "validTime",

a.action as "action",

c.expected_time as "expectedTime"

from

<include refid="table_name"/>

a

left join t_flow_sterilize_batch_instance b on a.id = b.packet_instance_id

left join t_flow_sterilize_batch c on b.sterilize_batch_id = c.id

where a.delete_flag = 0

group by a.id)

<include refid="condition_sql"/>

<include refid="failureTimeCreateUserFuzzy"></include>

<if test=" _sort !=null and _sort != ''">

order by ${_sort}

<if test="_order !=null and _order != ''">

${_order}

</if>

</if>

</select>然后就是去本地测试数据库环境进行调试,如果不想自己拼接sql的话可以选择从导出的日志文件里边拷贝sql语句,这样就不用自己一段段拼接这么辛苦了。比如下面的sql就是我从日志中直接粘贴出来的:

select a.id as "id", a.define_id as "defineId", a.packet_data as "packetData", a.status as "status", a.dept_id as "deptId", a.name as "name", a.warehouse_status as "warehouseStatus",

a.create_user as "createUser", a.create_time as "createTime", a.last_user as "lastUser", a.last_time as "lastTime", a.delete_flag as "deleteFlag", a.invalid_time as "invalidTime",

a.valid_time as "validTime", a.action as "action", c.expected_time as "expectedTime"

from t_flow_packet_instacne a

left join t_flow_sterilize_batch_instance b on a.id = b.packet_instance_id

left join t_flow_sterilize_batch c on b.sterilize_batch_id = c.id

where a.delete_flag = 0

and a.define_id= 'AF200914R3VGF3'

and a.warehouse_status in ( 1 , 2 , 3 , 4 , 6 )

order by a.create_time desc

limit 0,20这里因为实例对应的数据只有id是相同的,其他行大部分都不同,没办法用distinct来去重,就要用到某一字段的唯一性特点及group by分组。

# 模板

select * from table where id in (select max(id) from table group by [去除重复的字段名列表,....])

# 套模板

select max(c.expected_time)

from t_flow_packet_instacne a

left join t_flow_sterilize_batch_instance b on a.id = b.packet_instance_id

left join t_flow_sterilize_batch c on b.sterilize_batch_id = c.id

group by a.id上面那条sql就是只保留每一个实例最新过期时间

筛选最新的数据输出(即最大的expected_time):

select a.id as "id", a.define_id as "defineId", a.packet_data as "packetData", a.status as "status", a.dept_id as "deptId", a.name as "name", a.warehouse_status as "warehouseStatus",

a.create_user as "createUser", a.create_time as "createTime", a.last_user as "lastUser", a.last_time as "lastTime", a.delete_flag as "deleteFlag", a.invalid_time as "invalidTime",

a.valid_time as "validTime", a.action as "action", c.expected_time as "expectedTime"

from t_flow_packet_instacne a

left join t_flow_sterilize_batch_instance b on a.id = b.packet_instance_id

left join t_flow_sterilize_batch c on b.sterilize_batch_id = c.id

where a.delete_flag = 0

and c.expected_time

in (select max(c.expected_time)

from t_flow_packet_instacne a

left join t_flow_sterilize_batch_instance b on a.id = b.packet_instance_id

left join t_flow_sterilize_batch c on b.sterilize_batch_id = c.id

group by a.id)

and a.define_id= 'AF200914R3VGF3'

and a.warehouse_status in ( 1 , 2 , 3 , 4 , 6 )

order by a.create_time desc

limit 0,20然后把修改的部分粘贴到mapper里,install,重新发布新jar包就可以了。

到这就把坑给填了,果然处理了一个bug就会诞生更多的bug。

附送一张BUG处理流程(Boss画的):